Autolume Student Projects

The following student projects were created as part of the IAT460 course at SIAT, SFU, during the Spring 2025 semester.

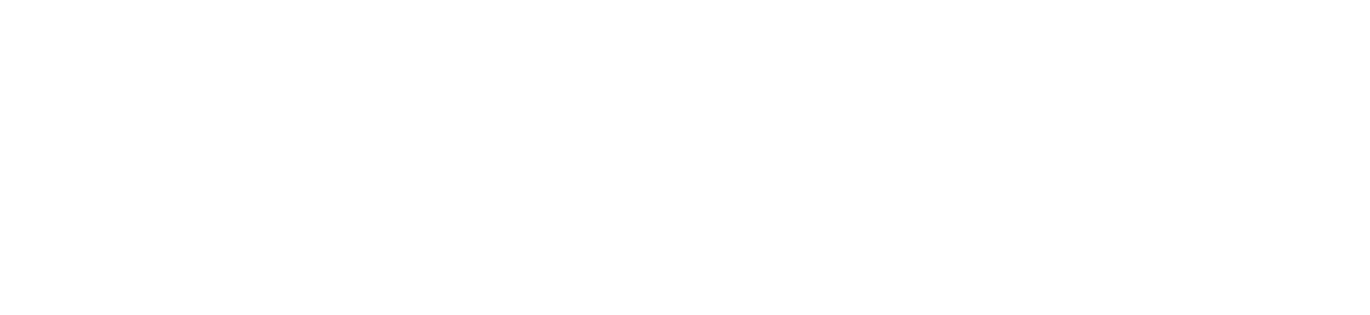

Wake Bender

Nathan Choo

Wake Bender is an interactive experience combining motion tracking and generative AI to simulate the manipulation of ocean wakes. This work showcases the potential of movement-based interaction alongside generative outputs from specialized AI systems in the media of spontaneous motion visuals and spectacle.

AutolumeGallery: Exploring GAN Latent Space with Virtual Reality

Robin de Zwart

AutolumeGallery is an interactive virtual gallery space where users can manipulate and explore the latent space of a GAN in 3D. A canvas receives artwork generated in real-time from Autolume-Live, influenced by the position and rotation of several objects in the virtual environment. Users can use these “manipulators” to alter the piece and take an active role in its creation and appearance. The GAN model was trained on the abstract artwork of my late grandfather, Peter John Voormeij, as my way of honouring and connecting with his work.

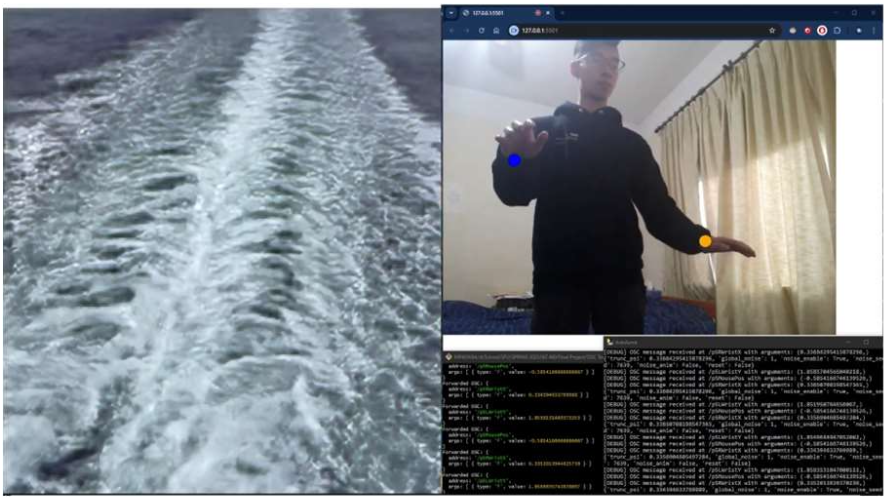

AstroGANesis: Real-time GAN Exploration with Autolume in an Interactive Game

Ge Liu

This paper presents AstroGANesis, an innovative interactive system merging gameplay with generative AI art exploration. The experience enables players to navigate a spacecraft through a pseudo-volumetric display using Leap Motion gesture control while simultaneously exploring a GAN’s latent space. As players pilot their craft in 30-second gameplay sessions, their spatial coordinates directly influence the GAN’s latent vector parameters, creating a dynamic, generative background that visually represents their journey through the model’s conceptual space. By coupling intuitive flight mechanics with real-time GAN output, AstroGANesis transforms abstract AI concepts into tangible visual experiences, making generative AI more accessible and comprehensible to general audiences. This work extends interactive machine learning paradigms while providing insights into effective methods for transparent LED screen stacking and optimization for multi-layer rendering in game engines.
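
One way to picture how spatial coordinates could steer a latent vector is sketched below. This is an illustration only, not the project's implementation: the 512-dimensional latent size, the fixed random axis directions, and the names `make_direction` and `position_to_latent` are all assumptions made for the example.

```python
import random

LATENT_DIM = 512  # assumption: a common StyleGAN latent size, not stated in the abstract


def make_direction(seed, dim=LATENT_DIM):
    """Return a reproducible random vector drawn from a standard normal."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


# Hypothetical setup: one base latent plus one direction per spatial axis.
BASE = make_direction(0)
AXES = [make_direction(s) for s in (1, 2, 3)]


def position_to_latent(x, y, z, strength=1.0):
    """Map a craft position (each coordinate normalized to [-1, 1]) to a latent vector.

    The result is BASE shifted along the three axis directions in
    proportion to the clamped coordinates.
    """
    coords = [max(-1.0, min(1.0, c)) for c in (x, y, z)]
    return [
        b + strength * sum(c * axis[i] for c, axis in zip(coords, AXES))
        for i, b in enumerate(BASE)
    ]
```

In this sketch the origin maps back to the base latent, so small movements of the craft produce small, continuous changes in the vector that would be passed to the generator.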

Hidden Palate: Generative AI as a Lens on Food Aesthetics and Waste

Cassandra Nguyen

Hidden Palate is a generative art project that explores the intersection of AI, food aesthetics, and sustainability by leveraging AI as a co-creator in reimagining food waste narratives. Using a layered, phase-based training approach, the project employs a carefully curated dataset combining imperfect produce, flowers, and vintage botanical illustrations. This methodology allows the model to generate outputs that challenge conventional beauty standards in food, transforming the visual language of produce into abstract, evolving forms that evoke reflection on societal perceptions of imperfection. Technically, the project demonstrates how small, high-quality datasets can be ethically leveraged through generative models, promoting creative exploration over realism. Through this creative process, the project positions AI not as a tool for mere replication, but as a partner in co-creation, opening up possibilities for interactive installations, educational media, and cross-disciplinary collaborations in unexpected ways.

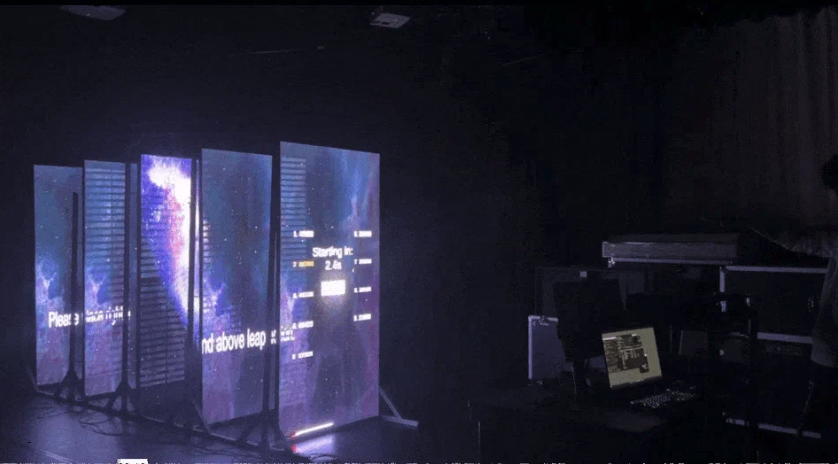

Gesture-Driven Immersive Generative Art Gallery: Extending Autolume to VR

Bill (Di) Zhao

We present an interactive virtual reality (VR) art gallery that integrates a generative adversarial network (GAN) visual synthesizer with immersive gesture-based user interaction. Building on Autolume, a no-code GAN platform for artists, our system brings artificial intelligence (AI)-generated imagery into a Unity-driven VR environment in real time. We introduce a Python bridge that converts each AI-generated image into a 3D heightmap, allowing the visuals to become sculptural elements in the gallery space. Users can influence the content and form of the gallery through intuitive VR gestures, effectively co-creating art in specific places. Specifically, users use one hand to select frames and the other to manipulate images: horizontal hand movements change the GAN’s seed, vertical movements adjust diversity parameters for subtle variations, and forward-backward motions toggle between 2D imagery and 3D sculptural forms. This paper details the design and technical architecture of the system, situates it in the context of related work in VR art and creative AI, and discusses findings from preliminary user interactions. Our results demonstrate a novel immersive experience in which the audience transitions from passive viewer to active participant, highlighting creative and technical implications for the future of AI art in virtual environments.
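
The gesture mapping and the image-to-heightmap step described above might look roughly like the following sketch. It is a guess at the general shape, not the project's actual bridge: `FrameState`, `apply_gesture`, `image_to_heightmap`, the step sizes, the toggle threshold, and the choice of luminance as the height signal are all hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class FrameState:
    seed: int = 0             # GAN seed for the selected frame
    diversity: float = 0.7    # hypothetical stand-in for the "diversity parameters"
    sculptural: bool = False  # False = flat 2D image, True = 3D heightmap form


def apply_gesture(state, dx, dy, dz,
                  seeds_per_metre=20, diversity_per_metre=1.0,
                  toggle_threshold=0.15):
    """Update a frame from hand displacement (dx, dy, dz), assumed to be in metres.

    Horizontal motion steps the seed, vertical motion nudges diversity,
    and a forward or backward push past the threshold switches the form.
    Treating forward as 3D and backward as 2D is an assumption.
    """
    seed = max(0, state.seed + round(dx * seeds_per_metre))
    diversity = min(1.0, max(0.0, state.diversity + dy * diversity_per_metre))
    sculptural = state.sculptural
    if dz > toggle_threshold:
        sculptural = True
    elif dz < -toggle_threshold:
        sculptural = False
    return replace(state, seed=seed, diversity=diversity, sculptural=sculptural)


def image_to_heightmap(pixels, max_height=1.0):
    """Convert rows of (r, g, b) tuples (0-255) into rows of heights.

    Brightness is used as height here, computed with the Rec. 601 luma
    weights; the abstract does not say which signal the bridge uses.
    """
    return [
        [(0.299 * r + 0.587 * g + 0.114 * b) / 255.0 * max_height
         for (r, g, b) in row]
        for row in pixels
    ]
```

A grid of heights like this is the kind of data a game engine can turn into a displaced mesh or terrain, which is one plausible route from a flat GAN output to a sculptural element.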

Dreams in Space: An AI Art System Blending Neuro-Architecture and Dreamcore Aesthetics

Bingmiao Zheng

This paper presents “Dreams in Space,” an audio-reactive generative AI art system that synthesizes principles from neuro-architecture with dreamcore aesthetics. The system employs a StyleGAN2 model trained on a custom dataset of images combining neuro-architectural elements with dreamcore motifs to generate surreal architectural spaces. A novel audio analysis framework maps musical features to latent space dimensions, creating dynamic visual representations that respond to music in psychologically meaningful ways. The primary innovation lies in establishing connections between audio features and spatial characteristics known to trigger specific cognitive and emotional responses. Unlike previous AI art systems that focus solely on visual aesthetics, this approach generates environments that potentially influence viewer experience through evidence-based neuro-architectural principles.
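
As a rough illustration of mapping audio features to latent dimensions, the sketch below computes two simple per-frame features and adds them to chosen latent coordinates. The abstract does not specify which features or dimensions the system uses, so RMS energy, zero-crossing rate, the `FEATURE_TO_DIM` table, and the `gain` parameter are all assumptions chosen for the example.

```python
import math


def rms(frame):
    """Root-mean-square energy of one audio frame (a list of samples)."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
    return crossings / (len(frame) - 1)


# Hypothetical assignment of audio features to latent dimensions.
FEATURE_TO_DIM = {"rms": 0, "zcr": 1}


def modulate_latent(base, frame, gain=2.0):
    """Return a copy of `base` with selected dimensions offset by audio features."""
    features = {"rms": rms(frame), "zcr": zero_crossing_rate(frame)}
    z = list(base)
    for name, dim in FEATURE_TO_DIM.items():
        z[dim] = base[dim] + gain * features[name]
    return z
```

Calling `modulate_latent` once per audio frame would give a latent vector that drifts with loudness and brightness of the sound while the untouched dimensions keep the overall scene stable.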